A simple fact: halving runtime doubles the number of runs a team can complete on the same hardware in the same time, so throughput gains can show up in months, not years.

This roundup helps technical buyers compare a wide range of solutions for computing and analytics. It clarifies what “high-performance software” means for practical results today and how gains translate to measurable KPIs.

We synthesize performance, features, and deployment factors from credible sources to give a clear view of what matters for time-to-results and total value. The guide compares open-source solvers, GPU-accelerated platforms, and turnkey cloud HPC so teams can map options to needs.

Expect focused profiles that highlight reduced runtimes, improved scalability, and better use of existing data across research and industry. Each product note includes practical takeaways on implementation paths and ecosystem fit to speed confident selection.

Key Takeaways

- Compare performance, cost, and deployment together to see real value.

- Open-source, GPU, and cloud HPC each suit different workloads.

- Faster time-to-results drives research and engineering impact.

- Security, integration, and cost control are essential evaluation criteria.

- Profiles include practical implementation tips for U.S. teams.

Why High-Performance Software Matters for Commercial Outcomes Today

Faster compute directly shortens development cycles, turning ideas into marketable products sooner. Time to insight maps straight to revenue: quicker analysis speeds decisions, trims cost, and sharpens competitive positioning across industry sectors.

Modern systems blend simulation, analytics, and AI to solve complex problems at scale. That mix shortens development, reduces engineering risk, and lets teams explore more scenarios with higher fidelity without missing deadlines.

Improved performance also lowers opportunity cost and operational risk on large projects. Organizations use efficient computing to meet energy and sustainability goals while keeping results consistent.

“Speed that preserves accuracy lets product teams ship faster with fewer costly reworks.”

Common challenges—budget limits, skills gaps, and integration complexity—can be mitigated by well-architected stacks and partner ecosystems. The business case is clear: fewer bottlenecks, higher utilization, and better outcomes under tight time constraints.

This article offers a practical view into solutions that turn performance gains into measurable commercial impact and durable competitive advantage.

Understanding High-Performance Software and HPC Solutions

Modern compute stacks weave together modeling, simulation, and AI to run end-to-end research and industrial pipelines. This convergence shortens time-to-insight and lets teams move from prototypes to production with fewer cycles.

From simulation to AI: Unifying workloads across research and industry

What unification looks like: simulation outputs feed machine learning, and trained models speed later runs while keeping fidelity. NVIDIA reports GPUs accelerate hundreds of applications across climate, energy, finance, engineering, and life sciences, showing broad practical value.

Where high performance meets energy efficiency and scalability

Architectural balance matters: right-size CPU, GPU, or hybrid tiers to match the problem. Domain scientists and engineers share platforms that support batch simulations and interactive analysis.

- Characterize workloads first—models and simulations vary in parallelism and I/O.

- Choose GPU acceleration for dense numerical kernels; prefer CPU-only for lightly parallel tasks.

- Prioritize portability and standards to move compute between on-prem and cloud.

“Scalability must cover problem size, parallelism, and data volume to run reliably from test to production.”

What’s next: the roundup maps these concepts to open-source solvers, GPU platforms, and cloud HPC offerings so buyers can match solutions to goals.

Buying Criteria: How to Evaluate High-Performance Software

Select evaluation criteria that tie measured gains to business goals and real workloads, not vendor claims.

Performance on real-world workloads

Design a performance-first plan that runs representative modeling, simulation, and AI jobs. Measure wall-clock speedups, throughput, and variance across repeated runs. Compare results on your data to avoid misleading synthetic benchmarks.
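The measurements named above can be collected with a small harness. The following is a minimal sketch using only the Python standard library; the function names `benchmark` and `speedup` and the placeholder workload are illustrative assumptions, not part of any product covered here.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(job: Callable[[], object], repeats: int = 5) -> Dict[str, float]:
    """Run `job` several times and summarize its wall-clock behaviour."""
    if repeats < 2:
        raise ValueError("need at least 2 repeats to estimate variance")
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        job()
        samples.append(time.perf_counter() - start)
    mean = statistics.mean(samples)
    stdev = statistics.stdev(samples)
    return {
        "mean_s": mean,
        "median_s": statistics.median(samples),
        "stdev_s": stdev,
        # Coefficient of variation: run-to-run noise relative to the mean.
        "cv": stdev / mean if mean > 0 else 0.0,
        # Throughput in jobs per hour at the mean runtime.
        "jobs_per_hour": 3600.0 / mean if mean > 0 else float("inf"),
    }


def speedup(baseline_s: float, candidate_s: float) -> float:
    """Wall-clock speedup of a candidate relative to a baseline."""
    return baseline_s / candidate_s


if __name__ == "__main__":
    # Placeholder workload (assumption): replace with a call that launches
    # one of your representative modeling, simulation, or AI jobs.
    print(benchmark(lambda: sum(i * i for i in range(200_000)), repeats=5))
    print("speedup:", speedup(baseline_s=120.0, candidate_s=60.0))
```

A high coefficient of variation suggests that more repeats, or a quieter test environment, are needed before comparing candidates.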

Scalability and elasticity

Test CPU and GPU tiers for parallel efficiency, queueing behavior, and autoscaling policies in cloud contexts. Validate how systems handle burst loads and resource churn to ensure predictable time-to-results.
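Parallel efficiency can be computed directly from strong-scaling timings (same problem size, increasing worker count). This is a minimal sketch; the function name `scaling_report` and the sample timings are illustrative assumptions, not measurements.

```python
from typing import Dict, List, Tuple


def scaling_report(timings: Dict[int, float]) -> List[Tuple[int, float, float]]:
    """Return (workers, speedup, efficiency) rows from strong-scaling timings.

    `timings` maps a worker count (cores or GPUs) to wall-clock seconds for
    the same fixed problem. The smallest worker count is the baseline.
    """
    base_workers = min(timings)
    base_time = timings[base_workers]
    rows = []
    for workers in sorted(timings):
        speedup = base_time / timings[workers]
        # Ideal speedup is workers / base_workers; efficiency is the
        # fraction of that ideal actually achieved.
        efficiency = speedup / (workers / base_workers)
        rows.append((workers, speedup, efficiency))
    return rows


if __name__ == "__main__":
    # Made-up timings for illustration only.
    for workers, s, e in scaling_report({1: 100.0, 2: 50.0, 4: 40.0}):
        print(f"{workers:>3} workers  speedup {s:.2f}  efficiency {e:.1%}")
```

Efficiency that drops sharply as workers are added is a sign that the tier is larger than the workload can use, which matters for both queueing and cost.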

Total cost and operational complexity

Weigh licensing, subscription, or pay-per-use models against maintenance burden. Factor in onboarding, CI/CD integration, and job scheduler compatibility to estimate real operational effort.

Security, compliance, and governance

Assess encryption, access controls, and audit readiness. Confirm data governance fits regulatory needs and that observability tools enable continuous tuning and cost-performance optimization.

- Validate portability and standards-compliant APIs to reduce lock-in.

- Check documentation, vendor SLAs, community support, and roadmaps for maintainability.

- Agree on acceptance criteria and run a proof-of-value that tests hardware, workloads, and governance controls before scale-up.

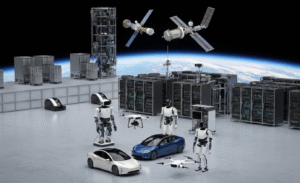

Product Roundup Overview: A Wide Range of Computing Solutions

To simplify selection, we separate offerings into three practical categories so teams can match tools to real R&D needs.

Open-source solver engines for deterministic optimization

Open solvers focus on LP, QP, and MIP problems used in planning, logistics, and scheduling applications. They offer predictable results and low licensing barriers, making them ideal for constrained optimization in finance and operations.

Example: HiGHS delivers MIT-licensed solvers with multi-language bindings that speed adoption across teams.

GPU-accelerated platforms powering scientific computing

GPU platforms bring the power to run dense modeling, simulation, and AI convergence at scale. NVIDIA highlights 700+ GPU-optimized applications across climate, energy, life sciences, finance, and engineering.

These stacks help researchers and engineers move from prototype to production faster by accelerating numeric kernels and visualization workflows.

Cloud-native HPCaaS for engineering and R&D at scale

HPCaaS unifies job orchestration, cost control, and security while broadening access to diverse hardware. Rescale, for example, pairs orchestration with 1,250+ integrations for easier toolchain and data pipeline management.

How to choose: map the range of solutions to your industry needs—risk models in finance, climate and medicine research, multiphysics engineering—and weigh integration, APIs, and multi-tenant security.

“This roundup contrasts capabilities, performance characteristics, and deployment trade-offs to give a compact view for clear decision-making.”

HiGHS: Open-Source High Performance Optimization for LP, QP, and MIP

For teams that embed optimization into models and simulation pipelines, HiGHS offers a compact, production-ready solver suite. It is designed to solve large, sparse linear programming (LP), quadratic programming (QP), and mixed-integer programming (MIP) problems with industrial-grade speed and reliability.

Core capabilities

Algorithmic breadth: HiGHS implements a dual revised simplex LP solver, a novel LP interior point solver, an active set QP solver, and a branch-and-cut MIP solver. These methods address complex constraints common in planning and scheduling.

Interfaces and portability

The project is written in C++11 and ships as a library plus stand-alone executables for Windows, Linux, and macOS. Official bindings include C, C#, FORTRAN, Julia, and Python to fit diverse engineering and analytics toolchains.

- Build: source builds require CMake 3.15+; binaries support quick trials.

- Integration: embed the library in systems or call the executable from pipelines.

- License & community: MIT license, active documentation, newsletters, and workshops (Edinburgh, June 26–27, 2025).

“Huangfu & Hall (2018) provides the peer-reviewed foundation for HiGHS’ performance claims.”

Evaluation tips: benchmark representative models, validate solver tolerances, and confirm language bindings match your development environment. Typical uses include supply chain planning, energy systems, portfolio allocation, and experimental design.
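One way to validate solver tolerances is to recheck a returned solution against the model independently of the solver. The sketch below is solver-agnostic and does not call HiGHS; the function name `max_primal_violation`, the dense row format, and the example model are assumptions for illustration. Compare the result against whatever feasibility tolerance your solver is configured with.

```python
from typing import Sequence

INF = float("inf")


def max_primal_violation(
    rows: Sequence[Sequence[float]],
    row_lower: Sequence[float],
    row_upper: Sequence[float],
    col_lower: Sequence[float],
    col_upper: Sequence[float],
    x: Sequence[float],
) -> float:
    """Largest bound or constraint violation of `x` (0.0 if fully feasible).

    The model is row_lower <= A x <= row_upper with col_lower <= x <= col_upper,
    where `rows` holds the dense rows of A.
    """
    worst = 0.0
    # Variable bounds.
    for xj, lo, hi in zip(x, col_lower, col_upper):
        worst = max(worst, lo - xj, xj - hi)
    # Constraint rows.
    for coeffs, lo, hi in zip(rows, row_lower, row_upper):
        activity = sum(a * xj for a, xj in zip(coeffs, x))
        worst = max(worst, lo - activity, activity - hi)
    return worst


if __name__ == "__main__":
    # Illustrative model: x + y <= 4, x + 3y <= 6, x >= 0, y >= 0.
    rows = [[1.0, 1.0], [1.0, 3.0]]
    violation = max_primal_violation(
        rows, [-INF, -INF], [4.0, 6.0], [0.0, 0.0], [INF, INF], x=[3.0, 1.0]
    )
    tolerance = 1e-7  # assumed acceptance threshold; align with your solver settings
    print("violation:", violation, "ok:", violation <= tolerance)
```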

The team can be contacted at highsopt@gmail.com, and donations are accepted through GitHub Sponsors and the Linux Foundation. Ongoing stewardship by Julian Hall and contributors keeps the project current for engineering teams.

NVIDIA HPC Solutions: Accelerated Computing for Science and Industry

NVIDIA’s accelerated stack powers dense modeling, AI-driven inference, and real-time visualization across research and industry pipelines.

Workloads: modeling, AI, and visualization

GPU acceleration targets simulations, model training, and interactive visualization in the same environment. This lets teams move from data ingestion to analysis without costly transfers.

Industry applications

Over 700 optimized applications span climate and weather, energy exploration, life sciences and medicine, finance, and engineering. That breadth speeds domain-specific adoption and reduces integration effort.

Innovation pipeline

Physics-informed machine learning and quantum circuit simulation now complement traditional simulations to tackle previously intractable problems.

- Evaluation tip: profile workloads, required precision, and scaling behavior before selecting GPUs and runtime stacks.

- Deployment: choose on-prem clusters, hybrid expansion, or cloud GPUs based on cost, control, and elasticity needs.

- Ecosystem: libraries and industry tooling cut integration time and accelerate results.

“Pairing GPUs with optimized stacks delivers consistent performance across diverse workloads, shrinking time-to-insight for engineering teams.”

Rescale Platform: Turnkey Cloud HPC, Data, and AI for R&D

Rescale centralizes orchestration, governance, and cost controls so R&D groups run complex workloads without heavy ops overhead. The platform combines compute, applications, and policy controls into a single pane for monitoring performance, spend, and security.

Full-stack orchestration, cost control, and security

Turnkey HPCaaS: automated provisioning, queueing, and policy enforcement reduce operational friction. Built-in budgeting and analytics help teams right-size resources and limit surprises on cloud bills.

Engineering data intelligence

Automatic metadata extraction and enrichment convert scattered simulation output into unified views. That digital thread surfaces actionable insights across projects and speeds design iteration.

AI with simulation data

Rescale supports training and deployment of physics-informed models using existing simulation data. Teams report orders-of-magnitude speedups for inference while keeping ~99% accuracy.

- 1,250+ ecosystem integrations ease adoption across a wide range of applications and systems.

- Cloud-native elasticity adapts to surges while preserving governance, encryption, and authentication controls.

- Supports design exploration, batch automation, and multi-step workflows to accelerate engineering loops.

“Customers such as NOV, Vertical Aerospace, and Daikin Applied cite faster cycles and higher productivity on Rescale.”

Fit: Ideal for organizations that need scalable HPC, unified data views, and controlled spend to speed design and simulation-driven decision making.

Pricing, Licensing, and TCO Considerations

Licensing choices and billing models shape whether speed gains translate to real return on investment. Start with clear goals: expected time savings, acceptable uptime, and who owns operational risk.

Open-source MIT licensing versus commercial subscriptions

Open-source (MIT): HiGHS is available under MIT on GitHub, minimizing procurement friction and licensing cost for trials and integrations.

Commercial: subscriptions and managed plans add SLAs, support, and enterprise controls useful for regulated deployments or large teams.

Balancing performance, time-to-results, and budget at scale

Build TCO from drivers, not invoices. Consider infrastructure consumption, administrative effort, developer productivity, and risk mitigation.

- Usage-based models align spend with actual computing consumption but require governance to avoid runaway costs.

- Hybrid strategies pair free tools like HiGHS with paid orchestration (Rescale) or GPU acceleration (NVIDIA) to span a broad range of needs.

- Budget pilots separately from production and add contingencies for workload spikes, data egress, and long-term storage tiers.

“Track utilization, queue times, and per-workload ROI to make cost and performance trade-offs visible.”

Recommendation: implement a cost-performance dashboard and vet vendors for billing transparency and portability before scaling.
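The per-workload figures behind such a dashboard can start as a small cost model built from the drivers listed above. This is a minimal sketch; the `WorkloadRun` fields, the rates, and the value estimate are all hypothetical inputs that each team would need to supply.

```python
from dataclasses import dataclass


@dataclass
class WorkloadRun:
    name: str
    node_hours: float          # infrastructure consumption
    rate_per_node_hour: float  # blended compute rate (assumed input)
    license_cost: float        # software cost attributed to this run
    staff_hours: float         # administrative and engineering effort
    staff_rate: float          # loaded hourly staff cost (assumed input)
    value_delivered: float     # estimated business value of the result

    def total_cost(self) -> float:
        """Cost built from drivers: compute, licenses, and people."""
        return (
            self.node_hours * self.rate_per_node_hour
            + self.license_cost
            + self.staff_hours * self.staff_rate
        )

    def roi(self) -> float:
        """Return on investment as (value - cost) / cost."""
        cost = self.total_cost()
        return (self.value_delivered - cost) / cost


if __name__ == "__main__":
    # Illustrative numbers only.
    run = WorkloadRun("cfd-sweep", 100.0, 2.0, 50.0, 5.0, 80.0, 1300.0)
    print(run.name, "cost:", run.total_cost(), "ROI:", run.roi())
```

Keeping pilot and production runs as separate records makes it easier to budget them separately, as recommended above.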

Implementation Playbook: Deployment, Integration, and Operations

Start deployment with a clear decision tree that maps workloads to on-prem clusters, GPU-accelerated nodes, or cloud-native HPCaaS. This reduces risk and speeds validation for engineering and research teams.

On-prem, GPU-accelerated, or cloud-native: choosing the right architecture

Choose on-prem when governance, steady capacity, and control are top priorities. Pick GPU-accelerated nodes for dense modeling, simulation, AI, and visualization needs. Use cloud HPCaaS when elasticity, integrations, and managed security matter most.
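The decision rules above can be written down as an explicit, reviewable function. The sketch below treats placement (on-prem versus cloud) and GPU acceleration as separate outputs; the field names, the rule order, and the cloud default for unclassified workloads are assumptions made for illustration, not a prescribed policy.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Workload:
    governance_first: bool      # control and data governance dominate
    steady_demand: bool         # predictable, planned capacity
    dense_numeric_or_ai: bool   # dense modeling, simulation, AI, visualization
    needs_elasticity: bool      # bursty demand or rapid provisioning


def recommend(w: Workload) -> Tuple[str, bool]:
    """Return (placement, use_gpu) for a workload profile."""
    if w.governance_first:
        placement = "on-prem"
    elif w.needs_elasticity:
        placement = "cloud HPCaaS"
    elif w.steady_demand:
        placement = "on-prem"
    else:
        # Assumed default: start with the lower-commitment option.
        placement = "cloud HPCaaS"
    return placement, w.dense_numeric_or_ai


if __name__ == "__main__":
    print(recommend(Workload(True, True, False, False)))
    print(recommend(Workload(False, False, True, True)))
```

Encoding the rules this way keeps the priority order visible, so stakeholders can challenge it before any hardware or contract is committed.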

Toolchain integration, data pipelines, and compliance workflows

Environment setup: define identity and access, network zones, and storage tiers. Add placement policies for bursting between on-prem and cloud.

- Schedulers & CI/CD: connect job schedulers, artifact registries, and pipeline runners to automate builds and runs.

- Language bindings: confirm that the HiGHS bindings you depend on (C, C++, Python, Julia) are installed at matching versions on every system.

- Data lineage: ingest, tag, and curate data with automated provenance and retention rules to support audits and reproducibility.
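For the data lineage item, a provenance record can be as simple as a content hash plus tags and a retention rule, captured at ingest. This is a minimal sketch with the standard library; the record fields and the function name `provenance_record` are hypothetical and would need to match your own governance schema.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Dict


def provenance_record(
    payload: bytes, source: str, tags: Dict[str, str], retention_days: int
) -> dict:
    """Build an audit-friendly record for one ingested artifact."""
    return {
        # Content hash lets auditors confirm the data has not changed.
        "sha256": hashlib.sha256(payload).hexdigest(),
        "size_bytes": len(payload),
        "source": source,
        "tags": dict(sorted(tags.items())),
        "retention_days": retention_days,
        "ingested_at": datetime.now(timezone.utc).isoformat(),
    }


if __name__ == "__main__":
    record = provenance_record(
        b"mesh,pressure\n1,101.3\n",
        source="solver-run-0001",  # illustrative identifier
        tags={"project": "demo", "stage": "raw"},
        retention_days=365,
    )
    print(json.dumps(record, indent=2))
```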

| Decision Factor | On-Prem | GPU Nodes | Cloud HPCaaS |
|---|---|---|---|
| Governance & Compliance | Strong control, low egress | Moderate (with policies) | Built-in controls (Rescale-like) |
| Workload Predictability | Steady, planned jobs | High-throughput, dense compute | Variable, bursty workloads |
| Operational Overhead | Higher ops burden | Driver & container maintenance | Lower with managed orchestration |
| Integration | Custom toolchains | Requires GPU stack validation | 1,250+ integrations available |

Operations playbook: monitor jobs and costs, set autoscale rules, and run incident drills. Use profiling to tune hardware, right-size nodes, and validate regressions after changes.
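Validating regressions after a change can be automated by comparing fresh timings with a stored baseline. The sketch below uses medians to reduce sensitivity to outliers; the 10% tolerance and the function name `is_regression` are assumptions to adjust per workload.

```python
import statistics
from typing import Sequence


def is_regression(
    baseline_s: Sequence[float],
    current_s: Sequence[float],
    tolerance: float = 0.10,
) -> bool:
    """True if the current median runtime exceeds baseline by more than `tolerance`."""
    base = statistics.median(baseline_s)
    cur = statistics.median(current_s)
    return cur > base * (1.0 + tolerance)


if __name__ == "__main__":
    baseline = [100.0, 102.0, 98.0]       # illustrative timings in seconds
    after_driver_update = [115.0, 120.0, 118.0]
    if is_regression(baseline, after_driver_update):
        print("regression detected: hold the rollout and profile the job")
```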

“Start small: run representative workloads, validate assumptions, then scale with SLAs and budget guardrails.”

Protect systems with encryption, MFA, and key management. Maintain documentation and change logs so teams retain continuity as technology and personnel change.

High-Performance Software: Final Takeaways and Next Steps

Start with clear workload targets and measure baseline time-to-results before selecting tools. Define acceptance criteria that cover cost, energy use, and performance.

Match needs: open-source optimization for decision models, GPU platforms for dense simulations and AI, and cloud HPC for scale and operational simplicity. Run pilots that validate cost-performance trade-offs and capture lessons learned.

Engage the community, documentation, and vendor ecosystems to reduce risk and speed onboarding. Prioritize interoperability, portability, and governance to future-proof systems and protect data.

Final checklist: workload profiles, integration map, performance targets, budget thresholds, and executive sponsorship. These steps help teams harness computing power to accelerate innovation across a wide range of simulations and solutions.

FAQ

What is the difference between traditional computing and high-performance solutions for simulation and AI?

Traditional systems handle general workloads, but HPC-class platforms focus on parallel processing, low-latency interconnects, and optimized libraries to run large-scale simulations, data analytics, and AI models faster. Those capabilities cut time-to-insight for research, engineering, and industry use cases such as climate modeling, drug discovery, and computational fluid dynamics.

How do I evaluate performance on real-world workloads like modeling and optimization?

Measure end-to-end time-to-solution using your representative datasets and pipelines. Test solver throughput for LP, QP, and MIP problems, profile GPU vs CPU performance, and compare I/O, memory, and network behavior. Look for reproducible benchmarks and vendor tools that mirror your production workloads.

Can GPU-accelerated platforms improve results for engineering simulations and AI?

Yes. GPUs excel at matrix operations and dense linear algebra used in simulations and deep learning. Platforms from NVIDIA and others pair accelerators with optimized libraries and frameworks to speed training, inference, and visualization, while reducing runtime and energy per operation.

What are the main factors when choosing between on-prem, cloud, or hybrid deployments?

Consider data gravity, compliance, latency, and total cost. On-prem gives control and predictable performance; cloud offers elasticity and rapid provisioning; hybrid models balance sensitive workloads on-site with burst capacity in the cloud. Evaluate orchestration, data pipelines, and vendor integrations.

How does energy efficiency factor into architecture and cost decisions?

Energy matters for operational cost and sustainability. Choose architectures that maximize performance per watt, such as modern accelerators and efficient cooling. Measure power draw under realistic workloads and include energy in TCO models to compare options fairly.
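Energy per job follows from measured average power and runtime, so it can be added to a TCO model with a few lines. This is a minimal sketch; the power figures, electricity price, and the `pue` facility-overhead multiplier are illustrative assumptions rather than measured values.

```python
def energy_kwh(avg_power_watts: float, runtime_s: float) -> float:
    """Energy used by one job in kilowatt-hours."""
    return avg_power_watts * runtime_s / 3_600_000.0


def energy_cost(
    avg_power_watts: float,
    runtime_s: float,
    price_per_kwh: float,
    pue: float = 1.0,
) -> float:
    """Electricity cost of one job; `pue` scales for cooling and facility overhead."""
    return energy_kwh(avg_power_watts, runtime_s) * pue * price_per_kwh


if __name__ == "__main__":
    # Illustrative comparison: a slower low-power node versus a faster,
    # higher-power accelerated node running the same job.
    cpu_kwh = energy_kwh(avg_power_watts=400.0, runtime_s=36_000.0)
    gpu_kwh = energy_kwh(avg_power_watts=1000.0, runtime_s=7_200.0)
    print("CPU node kWh:", cpu_kwh, "GPU node kWh:", gpu_kwh)
```

The comparison shows why power draw alone is misleading: a node that draws more power can still use less energy per job if it finishes sooner.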

What licensing models should I weigh for solvers and platforms?

Licensing ranges from open-source MIT-style options to commercial subscriptions and enterprise support contracts. Open-source tools like HiGHS offer permissive licensing and community contributions, while commercial solutions provide optimized stacks, SLAs, and vendor support that can simplify deployment and compliance.

How important is scalability across CPU, GPU, and cloud environments?

Scalability ensures your workloads run efficiently as data and model size grow. Look for architectures and software that support multi-node MPI, GPU clustering, and cloud elasticity. Seamless portability reduces rework when moving between on-prem and cloud resources.

What security and compliance controls are essential for R&D and regulated industries?

Implement role-based access, encryption at rest and in transit, audit logging, and data governance policies. For regulated fields, verify vendor certifications and compliance frameworks, and ensure toolchains support secure workflows and provenance tracking.

How can cloud-native HPCaaS platforms accelerate engineering and R&D?

HPCaaS providers like Rescale offer orchestration, cost controls, and prebuilt images that remove infrastructure overhead. They let teams provision optimized clusters, run experiments at scale, and integrate simulation data with AI tools to speed iteration and lower administrative burden.

What role does open-source solver software play in optimization workflows?

Open-source solvers provide transparency, extensibility, and low entry cost for LP, QP, and MIP problems. Projects such as HiGHS implement algorithms like dual revised simplex, interior point methods, and branch-and-cut, and offer interfaces for Python, Julia, C/C++, and other languages to integrate into pipelines.

How do vendors like NVIDIA support scientific workflows beyond hardware?

NVIDIA supplies a software stack—libraries, compilers, and SDKs—tuned for GPUs, plus ecosystem partnerships and developer tools. That stack accelerates modeling, visualization, and AI convergence, enabling breakthroughs in climate research, life sciences, and engineering.

What should I include in a deployment playbook for integration and operations?

Define architecture choices, provisioning scripts, monitoring, backup and recovery, and compliance checks. Standardize toolchains, container images, and CI/CD for models and pipelines. Include performance baselines and escalation paths to keep operations predictable.

How do I balance total cost of ownership with time-to-results?

Model both capital and operational expenses, factoring in hardware lifetime, energy, staffing, and software licenses. Quantify the business value of faster results—shorter design cycles, more experiments, and quicker product launches—to justify higher-performance investments when they deliver measurable outcomes.

What are best practices for integrating simulation data with AI?

Use standardized data schemas and metadata for provenance. Apply physics-informed models to combine simulation outputs with machine learning. Automate data pipelines, version datasets, and validate models on holdout scenarios to ensure robust, explainable predictions.
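Holdout validation is more trustworthy when whole scenarios, not individual samples, are kept out of training, and when the assignment stays stable across dataset versions. The sketch below assigns scenarios by hashing their identifiers; the function names and the 20% fraction are assumptions for illustration.

```python
import hashlib
from typing import Iterable, List, Tuple


def is_holdout(scenario_id: str, holdout_fraction: float = 0.2) -> bool:
    """Deterministically assign a scenario to the holdout set by hashing its ID."""
    digest = hashlib.sha256(scenario_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big")  # uniform in [0, 2**64)
    return bucket < holdout_fraction * 2**64


def split_scenarios(
    scenario_ids: Iterable[str], holdout_fraction: float = 0.2
) -> Tuple[List[str], List[str]]:
    """Return (train_ids, holdout_ids); the split is stable as data is added."""
    train, holdout = [], []
    for sid in scenario_ids:
        (holdout if is_holdout(sid, holdout_fraction) else train).append(sid)
    return train, holdout


if __name__ == "__main__":
    ids = [f"scenario-{i:03d}" for i in range(20)]  # illustrative IDs
    train, holdout = split_scenarios(ids)
    print(len(train), "train;", len(holdout), "holdout")
```

Because the assignment depends only on the identifier, a scenario never moves between sets when new simulations are ingested, which keeps validation results comparable between dataset versions.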