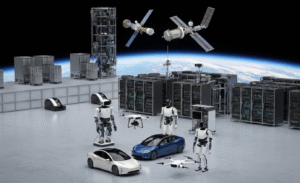

Surprising fact: the market for modern infrastructure is set to double from USD 120 billion to USD 241 billion at a 10.5% CAGR in just seven years.
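As a quick sanity check on those figures, compounding USD 120 billion at 10.5% a year for seven years does land near USD 241 billion. A minimal sketch of that arithmetic, where the function name `project_value` is our own:

```python
def project_value(start: float, cagr: float, years: int) -> float:
    """Compound a starting value at a fixed annual growth rate."""
    return start * (1 + cagr) ** years


# Figures taken from the claim above: USD 120 billion, 10.5% CAGR, 7 years.
projected = project_value(120.0, 0.105, 7)
print(f"Projected market size: USD {projected:.1f} billion")
```

The growth factor over seven years is roughly 2.01, which is why the market "doubles" in that window.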

Today’s infrastructure spans servers, storage, networking, and operating systems that power applications and analytics. These layers let teams deploy software faster, automate routine work, and use cloud platforms like AWS, Microsoft Azure, and IBM Cloud to scale on demand.

Good design boosts productivity: resilient systems and sound management cut downtime and speed time-to-market.

Modern stacks also enable real-time decision-making with streaming data and strengthen protection with firewalls, intrusion detection systems (IDS), next-generation antivirus (NGAV), and encryption. The right mix of services balances performance, cost, and risk while supporting compliance and innovation.

Key Takeaways

- Modern architectures are the foundation for digital business and faster application delivery.

- Cloud options add elastic capacity, but value relies on strong management and governance.

- Security and resilience are essential to reduce downtime and protect information.

- Choosing the right platforms affects performance, availability, and cost control.

- Expect faster time-to-market, better data-driven decisions, and predictable service levels.

Foundations of IT Infrastructure in the Modern Enterprise

A modern enterprise relies on layered systems—hardware, platforms, and networks—that jointly deliver business services.

Core components include racks of servers and storage arrays, switches and routers, and the software layers that manage resources. Data center facilities add power, cooling, structured cabling, and physical access controls to protect equipment.

How systems and devices connect

On-premises assets link to carrier-operated backbones using fiber, satellite, and microwave links. ISPs like Verizon and AT&T provide secure tie-ins at colocation sites and data centers, while load balancers and repeaters keep traffic flowing.

Platforms, operating systems, and applications

Operating systems and middleware allocate compute and memory for applications. Virtualization and control planes let organizations scale services and isolate workloads for better performance and security.

Storage and data flows

Data is placed on on-premises arrays or cloud storage tiers according to throughput, latency, and durability needs. Clear data mapping helps teams plan backups, replication, and disaster recovery.

Who runs the stack and market drivers

Enterprise teams manage on-site assets, colocation providers handle facilities, cloud providers supply elastic services, and ISPs deliver transit. Rising cloud adoption and AI workloads drive demand for resilient, easy-to-manage systems.

“Accurate asset records and monitoring are essential to reduce outages and speed recovery.”

Types of IT Infrastructure and Cloud Infrastructure Approaches

Organizations choose a mix of on-site systems and cloud services to match cost, compliance, and performance goals. This section outlines common models and when to pick each.

Traditional on‑premises and data center facilities

On‑premises means company-run data centers with direct control over servers, storage, and hardware. This offers tight governance and low-latency access.

Trade-offs include higher capital expense, longer procurement cycles, and manual operations for patching and upgrades.

Cloud computing models: IaaS, PaaS, SaaS

IaaS delivers elastic compute and storage on-demand. It suits variable workloads that need quick scale.

PaaS provides managed platforms for development and deployment. SaaS offers complete applications without local installs, reducing operational overhead.

Hybrid cloud architectures

Hybrid cloud blends private, public, and on‑prem resources to balance agility and governance.

This model keeps steady-state core systems on-site and sends burst demand to public clouds, so extra capacity is paid for only when it is needed.
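To make the burst idea concrete, here is a minimal sketch of how demand might be split between fixed on-site capacity and public cloud overflow. The function name and the abstract "capacity units" are illustrative assumptions, not part of any vendor API.

```python
def split_demand(demand_units: int, on_prem_capacity: int) -> dict[str, int]:
    """Fill on-site capacity first, then send the remainder to the cloud."""
    if demand_units < 0 or on_prem_capacity < 0:
        raise ValueError("demand and capacity must be non-negative")
    on_prem = min(demand_units, on_prem_capacity)
    return {"on_prem": on_prem, "cloud_burst": demand_units - on_prem}


# Hypothetical day: normal load fits on-site, a peak spills into the cloud.
print(split_demand(80, 100))
print(split_demand(130, 100))
```

Real placement decisions also weigh data location, bandwidth, and compliance, which this sketch leaves out.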

Converged and hyperconverged systems

Converged stacks bundle compute, storage, and network into a tested package. Hyperconverged systems use software-defined controls for tighter integration and easier scaling.

Virtualization, containers, and abstraction

Virtualization creates VMs that let one server host many OS instances, improving resource utilization. Containers increase density and portability for modern apps.

| Approach | Best for | Operational impact |
|---|---|---|
| On‑premises | Regulated workloads, low-latency systems | High capital spend, internal staffing |
| Public cloud (IaaS/PaaS/SaaS) | Variable demand, global services | OpEx model, vendor management |
| Hybrid cloud | Core steady-state + burst capacity | Requires strong networking and governance |
| Hyperconverged | Fast deployment, scale-out simplicity | Vendor lock-in risk, easier automation |

Selection depends on compliance, budget, long-term scale, and the required platforms and services. Consider data placement, network bandwidth, and security controls when you mix environments.

IT Infrastructure Management for Availability, Performance, and Scale

To keep services online and fast, teams need unified visibility and automated controls across all resources. A single-pane-of-glass view centralizes information about servers, storage, network paths, and platform health. This makes diagnosis faster and reduces mean time to repair.

Unified monitoring, alerts, and observability

Unified monitoring ties logs, metrics, and traces into one console. Tune alerts to business-impact thresholds and link them to runbooks. That helps teams act on real problems, not noise.
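One way to picture alerts tied to runbooks is a small rule table that maps a metric and threshold to the document a responder should open. This is a sketch only: the metric names, thresholds, and runbook paths below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertRule:
    metric: str
    threshold: float
    runbook: str  # hypothetical runbook identifier


# Illustrative rules chosen for business impact, not raw resource usage.
RULES = [
    AlertRule("checkout_error_rate", 0.02, "runbooks/checkout-errors"),
    AlertRule("api_p95_latency_ms", 800.0, "runbooks/api-latency"),
]


def evaluate(metrics: dict[str, float]) -> list[str]:
    """Return the runbook for every rule whose metric exceeds its threshold."""
    return [r.runbook for r in RULES if metrics.get(r.metric, 0.0) > r.threshold]


print(evaluate({"checkout_error_rate": 0.05, "api_p95_latency_ms": 300.0}))
```

Metrics that are missing from the input are treated as zero here; a production system would more likely flag missing data as its own alert.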

Automation and orchestration

Use automation to react to variable load and to run repeatable ops tasks. Orchestration tools deploy changes, run failovers, and scale services with fewer manual steps. Automation also lowers human error and speeds recovery.
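Reacting to variable load often comes down to a simple scaling rule. The sketch below assumes a target-tracking approach: scale the replica count in proportion to how far utilization is from a target, then clamp it to safe bounds. The default target and limits are placeholder values.

```python
import math


def desired_replicas(
    current: int,
    cpu_utilization: float,
    target: float = 0.5,
    min_replicas: int = 2,
    max_replicas: int = 20,
) -> int:
    """Scale replicas proportionally to utilization, within fixed bounds."""
    raw = math.ceil(current * cpu_utilization / target)
    return max(min_replicas, min(max_replicas, raw))


# Four replicas running at 75% CPU against a 50% target.
print(desired_replicas(4, 0.75))
```

The clamp matters as much as the formula: the floor protects availability during quiet periods, and the ceiling caps spend during spikes.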

CMDB, asset discovery, and relationship mapping

Maintain a CMDB with automated discovery so you can map dependencies. Visual maps show the blast radius of changes and guide safe rollouts across complex systems.
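Blast radius can be estimated by walking the dependency map outward from the item being changed. The following is a minimal sketch using a breadth-first search; the configuration items and their relationships are invented for illustration, and a real CMDB would supply them through discovery.

```python
from collections import deque

# Hypothetical CMDB relationships: each key is depended on by the listed items.
DEPENDENTS = {
    "db-primary": ["orders-api", "billing-api"],
    "orders-api": ["web-frontend"],
    "billing-api": ["web-frontend"],
    "web-frontend": [],
}


def blast_radius(changed: str, dependents: dict[str, list[str]]) -> set[str]:
    """Return every item that directly or indirectly depends on `changed`."""
    seen: set[str] = set()
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for child in dependents.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    seen.discard(changed)  # guard against cycles leading back to the start
    return seen


print(sorted(blast_radius("db-primary", DEPENDENTS)))
```

A change to the database touches everything downstream, while a change to the front end affects nothing else in this small map.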

Lifecycle, capacity, and cost management

Embed lifecycle practices for procurement, patching, upgrades, and retirement. Track consumption and rightsize instances to control costs. Combine capacity planning with regular maintenance to keep performance and security aligned with business goals.

- Goal: ensure availability, performance, and scalability.

- Practice: single-pane tools plus CMDB and automated runbooks.

- Outcome: predictable services, lower costs, and improved security posture.

Infrastructure Security and Resilience by Design

Strong defenses and resilient design keep services running when threats or failures occur.

Start with layered controls. Physical protections—controlled access, surveillance, and hardened facilities—work alongside logical tools like firewalls, IDS/IPS, and next‑generation antivirus. Add comprehensive encryption to protect data in transit and at rest.

Physical and logical controls

Least‑privilege identity and multifactor authentication reduce unauthorized access. Continuous monitoring ties user activity to alerts so teams can act quickly.
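In code, least privilege plus multifactor authentication reduces to two checks: has the user passed MFA, and does their role explicitly grant the action? The sketch below assumes a simple role-to-permission table; the role and permission names are hypothetical.

```python
# Hypothetical roles, each granted only the actions it needs.
ROLE_PERMISSIONS = {
    "analyst": {"reports:read"},
    "operator": {"reports:read", "servers:restart"},
}


def is_allowed(role: str, action: str, mfa_verified: bool) -> bool:
    """Deny by default: require MFA and an explicit grant for the action."""
    if not mfa_verified:
        return False
    return action in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("analyst", "servers:restart", mfa_verified=True))
print(is_allowed("operator", "servers:restart", mfa_verified=True))
```

Unknown roles get an empty permission set, so anything not listed is refused.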

Backup, disaster recovery, and high availability

Design backup and disaster recovery to meet recovery time objectives (RTO) and recovery point objectives (RPO). Use high‑performance storage systems and cloud replication to protect critical data and service continuity.
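A recovery plan can be checked against its recovery time and recovery point objectives with simple arithmetic. This sketch assumes worst-case data loss is about one backup interval and that restore time has been measured in a test; the class and field names are our own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RecoveryPlan:
    backup_interval_min: int  # worst-case data loss is roughly one interval
    restore_time_min: int     # measured or estimated time to restore service


def meets_objectives(plan: RecoveryPlan, rpo_min: int, rto_min: int) -> bool:
    """True when the plan fits within both the RPO and RTO, in minutes."""
    return plan.backup_interval_min <= rpo_min and plan.restore_time_min <= rto_min


# Hypothetical targets: lose at most 30 minutes of data, recover within 60.
print(meets_objectives(RecoveryPlan(15, 45), rpo_min=30, rto_min=60))
print(meets_objectives(RecoveryPlan(60, 45), rpo_min=30, rto_min=60))
```

The second plan fails because hourly backups cannot satisfy a 30-minute recovery point, no matter how fast the restore is.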

Architect high availability across multiple data centers and cloud regions. Include failover routes, health checks, and redundant network, compute, and storage layers.
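Failover logic usually boils down to "use the first healthy site in priority order." Here is a minimal sketch of that decision, assuming health check results arrive as a simple mapping; the site names are hypothetical.

```python
from typing import Optional


def pick_endpoint(priority_order: list[str], health: dict[str, bool]) -> Optional[str]:
    """Return the highest-priority endpoint that passed its health check."""
    for endpoint in priority_order:
        if health.get(endpoint, False):  # unknown endpoints count as unhealthy
            return endpoint
    return None


sites = ["dc-east", "dc-west", "cloud-region-1"]  # hypothetical sites
print(pick_endpoint(sites, {"dc-east": False, "dc-west": True, "cloud-region-1": True}))
```

Returning `None` when every site is down forces the caller to handle a full outage explicitly instead of routing traffic to a dead endpoint.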

Policy, governance, and immutable approaches

Define policies that map controls, logging, and audits to compliance needs and business risk. Adopt immutable approaches where feasible—replace images and nodes rather than patching live systems—to reduce drift and simplify rollback.

- Integrate security telemetry into centralized monitoring to correlate events across facilities and cloud services.

- Run regular game days and simulations to validate recovery and failover plans.

- Balance protection with usability so teams can access data without friction.

Outcome: a practical, repeatable approach that keeps data safe, reduces downtime, and scales as systems evolve.

Optimizing Performance, Networking, and Costs

Optimizing system performance hinges on smarter network paths, faster routing, and clear traffic priorities. Modern stacks cut latency and let teams steer critical applications ahead of background work. Continuous monitoring and reporting make tuning decisions simple and repeatable.

Performance engineering: faster networks, load balancing, and low‑latency paths

Apply performance engineering across the stack by optimizing routing, peering, and DNS. Use intelligent load balancing to shape load and to ensure mission‑critical services stay responsive.
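"Intelligent" load balancing can mean many things. One common and simple policy is least connections: send the next request to the backend with the fewest active connections. A sketch, with invented backend names:

```python
def least_connections(active: dict[str, int]) -> str:
    """Pick the backend with the fewest active connections (ties by name)."""
    if not active:
        raise ValueError("no backends available")
    return min(active, key=lambda name: (active[name], name))


# Hypothetical snapshot of active connections per backend.
print(least_connections({"app-1": 12, "app-2": 7, "app-3": 9}))
```

Breaking ties by name keeps the choice deterministic, which makes behavior easier to reason about in tests.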

Implement QoS, traffic shaping, and shorter paths for high‑priority flows. Instrument applications and services end to end so you can spot bottlenecks and act quickly.
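Network QoS is configured on switches and routers, but the same idea can be shown at the application level with strict-priority scheduling, one of the simplest QoS disciplines. The sketch below serves lower priority numbers first and keeps arrival order within a priority; the class name and request labels are illustrative.

```python
import heapq
from itertools import count


class PriorityDispatcher:
    """Serve requests in priority order; lower number means more urgent."""

    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str]] = []
        self._seq = count()  # tie-breaker that preserves arrival order

    def submit(self, priority: int, request: str) -> None:
        heapq.heappush(self._heap, (priority, next(self._seq), request))

    def next_request(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


dispatcher = PriorityDispatcher()
dispatcher.submit(2, "backup-sync")
dispatcher.submit(0, "checkout")
dispatcher.submit(1, "search")
while len(dispatcher):
    print(dispatcher.next_request())
```

Strict priority can starve background work under sustained load, which is why real deployments often pair it with rate limits or weighted queues.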

Cost optimization: right‑sizing compute, storage tiers, and platform choices

Right‑size compute, storage, and bandwidth to match workload patterns. Leverage autoscaling, scheduled scaling, and cloud elasticity to avoid wasted resources.
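Right-sizing is mostly arithmetic: take observed peak usage, add headroom, and round up. The sketch below assumes peak utilization is expressed as a fraction of the current allocation and uses a placeholder 25% headroom.

```python
import math


def rightsize_vcpus(current_vcpus: int, peak_utilization: float, headroom: float = 0.25) -> int:
    """Suggest a vCPU count that covers peak usage plus headroom."""
    needed = current_vcpus * peak_utilization * (1 + headroom)
    return max(1, math.ceil(needed))


# A 16-vCPU instance that peaks at 25% utilization.
print(rightsize_vcpus(16, 0.25))
```

In this example the suggestion drops from 16 vCPUs to 5. The result still has to be mapped to the instance sizes a provider actually offers.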

Use cloud storage tiers and CDN caching so hot data stays fast while archival storage reduces monthly costs. Standardize platforms and adopt tools that show real‑time and historical data for capacity planning.
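Tiering rules are often based on how recently data was accessed. A minimal sketch follows, assuming three tiers and cutoffs of 30 and 180 days; both the tier names and the cutoffs are placeholders to adapt to a provider's actual storage classes and pricing.

```python
def choose_tier(days_since_access: int) -> str:
    """Map time since last access to a storage tier (illustrative cutoffs)."""
    if days_since_access < 0:
        raise ValueError("days_since_access must be non-negative")
    if days_since_access <= 30:
        return "hot"
    if days_since_access <= 180:
        return "cool"
    return "archive"


for days in (3, 90, 400):
    print(days, choose_tier(days))
```

Retrieval fees and minimum storage durations on colder tiers can outweigh the savings for data that is read unexpectedly, so cutoffs deserve periodic review.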

“Continuous tuning of network and platform choices turns measurable savings into better user experience.”

Conclusion

A clear roadmap that coordinates platforms, storage, and services helps teams move from firefights to strategic work.

Align components—hardware, software, networks, and facilities—with disciplined management to make systems reliable and business‑focused.

Use hybrid cloud and cloud infrastructure where they add agility. Keep accurate inventories of on‑premises data, servers, and storage to reduce risk.

Embrace virtualization, automation, and unified monitoring to scale applications and lower cost. Layered controls and infrastructure security protect critical data across data centers.

Next step: build a phased plan that sequences resource, application, and service upgrades to deliver measurable business outcomes and preserve flexibility for change.

FAQ

What components make up a modern IT setup?

A modern setup combines servers, storage systems, networking hardware, virtualization software, operating systems, and applications. Data centers or cloud platforms host these resources, while tools for monitoring, orchestration, and security keep services available and performant.

How do on-premises systems differ from cloud services like AWS or Azure?

On-premises systems are owned and operated by the organization and run in local data centers or colocation sites. Cloud services such as Amazon Web Services and Microsoft Azure deliver compute, storage, and platform services on demand, shifting capital expenses to operational spending and offering elastic scaling and managed services.

What is hybrid cloud and when should a company use it?

Hybrid cloud blends private, public, and on‑premises resources so workloads move where they run best. Use it when compliance, latency, or legacy systems require local control while other workloads benefit from public cloud scalability and services.

What roles do virtualization and containers play in scalability?

Virtualization abstracts hardware to run multiple virtual machines on a host, improving utilization. Containers package applications and dependencies for consistent deployment and quick scaling. Both accelerate provisioning and support resilient architectures.

How can organizations ensure availability and disaster recovery?

Implement redundancy across sites, use backup and replication to secondary data centers or cloud regions, and design for failover with load balancing and automated recovery. Regular testing of recovery procedures is essential for reliability.

What security controls are critical for protecting systems and data?

Combine physical controls in data centers with logical defenses: firewalls, intrusion detection/prevention, encryption for data at rest and in transit, strong identity and access management, and continuous monitoring and patching to reduce vulnerabilities.

How does observability differ from traditional monitoring?

Observability provides deep insight into system behavior by correlating logs, metrics, and traces, enabling faster root‑cause analysis. Traditional monitoring often focuses on predefined alerts and thresholds rather than holistic diagnostics.

What is converged and hyperconverged infrastructure, and why choose it?

Converged systems package compute, storage, and networking from multiple vendors into a single solution. Hyperconverged infrastructure merges those layers into a software-defined appliance for simplified management, faster deployment, and lower operational overhead.

How do organizations manage capacity and control costs?

Use capacity planning, right‑sizing of compute and storage tiers, lifecycle management, and automation to scale on demand. Cloud cost tools, reserved instances, and storage tiering also help lower spending while meeting performance needs.

What are common performance optimizations for networks and applications?

Optimize by load balancing, reducing latency with edge or CDN services, using faster network paths, tuning application code and databases, and employing caching. Observability data guides where to prioritize improvements.

Who typically manages the technology stack in enterprises?

Responsibility often rests with operations teams, cloud architects, network and security engineers, and platform owners. Organizations may also partner with cloud providers, colocation facilities, or managed service providers for specialized capabilities.

How do governance and policy reduce operational risk?

Clear policies on configuration, change control, access management, and backup frequency enforce consistent practices. Governance frameworks and automated compliance checks limit human error and help meet regulatory requirements.

What is the role of automation and orchestration in modern environments?

Automation streamlines repetitive tasks like provisioning and patching, while orchestration coordinates multi-step workflows across systems. Together they improve speed, reduce mistakes, and help systems scale to meet demand.

How should organizations approach storage and data flows between on-prem and cloud?

Classify data by sensitivity and access patterns, then map storage tiers accordingly. Use secure replication and synchronization tools to move or mirror data, and ensure encryption and access controls during transit and at rest.

What market trends are driving demand for new platforms and services?

Growth in cloud adoption, edge computing, containerization, and demand for real‑time analytics drive platform innovation. Businesses seek flexible, cost‑effective services that support hybrid models and rapid development cycles.